Breathtaking Tips About How To Check Data Integrity

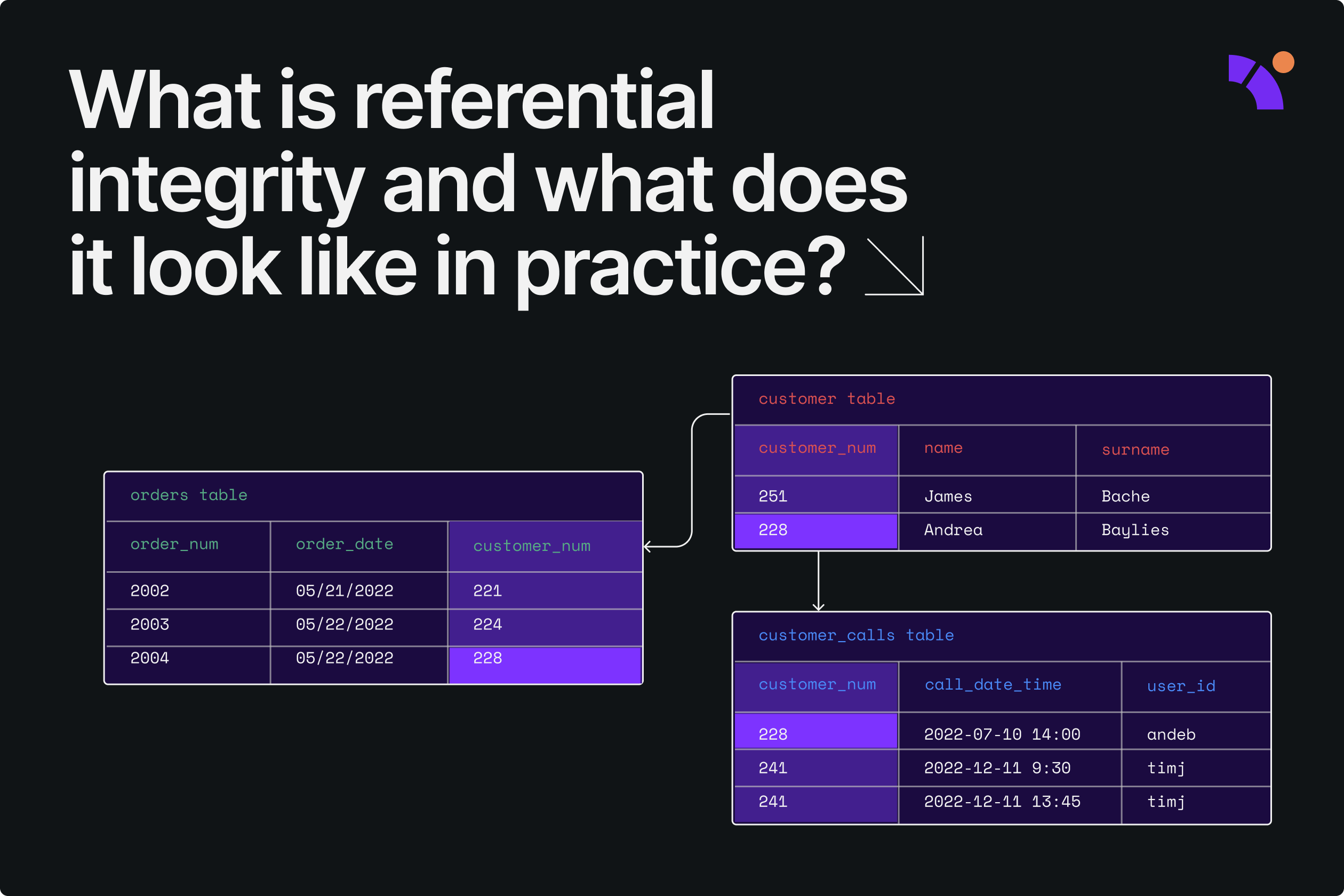

Check whether you can add, delete, or modify any data in tables.
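The add/delete/modify check can be automated. Below is a minimal sketch in Python using the standard-library `sqlite3` module. The table name `integrity_probe` is hypothetical and should point at a scratch table, never production data. The function writes one row, modifies it, deletes it, reads the row back after each step, and rolls back at the end so no probe rows are left behind.

```python
import sqlite3


def check_crud(conn: sqlite3.Connection) -> dict[str, bool]:
    """Add, modify, and delete one row in a scratch table, verifying each step.

    `integrity_probe` is a hypothetical scratch table used only for this check.
    """
    # DDL runs outside the implicit transaction, so the table itself persists.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS integrity_probe ("
        "id INTEGER PRIMARY KEY, value TEXT NOT NULL)"
    )
    select = "SELECT value FROM integrity_probe WHERE id = ?"
    results = {}

    # Add: the new row must read back with exactly the value written.
    conn.execute("INSERT INTO integrity_probe (id, value) VALUES (?, ?)", (1, "original"))
    results["add"] = conn.execute(select, (1,)).fetchone() == ("original",)

    # Modify: the update must be visible on the next read.
    conn.execute("UPDATE integrity_probe SET value = ? WHERE id = ?", ("modified", 1))
    results["modify"] = conn.execute(select, (1,)).fetchone() == ("modified",)

    # Delete: the row must be gone afterwards.
    conn.execute("DELETE FROM integrity_probe WHERE id = ?", (1,))
    results["delete"] = conn.execute(select, (1,)).fetchone() is None

    # Undo the probe's row changes; the empty scratch table remains.
    conn.rollback()
    return results


if __name__ == "__main__":
    print(check_crud(sqlite3.connect(":memory:")))
```

Rolling back instead of committing keeps the check repeatable: running it twice against the same connection behaves the same way.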

How do you check data integrity? There are several different ways to answer this question.

To test data integrity, start with accuracy, which refers to the correctness of data values: ensure that data is recorded exactly as intended.
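Accuracy is easiest to test when a trusted reference exists, such as the original form or source system. This sketch assumes both the recorded data and that reference are available as lists of dictionaries sharing a key field (a hypothetical `id`), and reports every field whose recorded value differs from the reference.

```python
from typing import Any

Row = dict[str, Any]


def find_inaccurate_values(
    recorded: list[Row], reference: list[Row], key: str = "id"
) -> list[tuple[Any, str, Any, Any]]:
    """Compare recorded rows field by field against a trusted reference.

    Returns (key, field, recorded_value, expected_value) for every mismatch.
    Rows absent from the reference are skipped: that is a completeness
    question, not an accuracy one.
    """
    expected_by_key = {row[key]: row for row in reference}
    mismatches = []
    for row in recorded:
        expected = expected_by_key.get(row[key])
        if expected is None:
            continue
        for field, expected_value in expected.items():
            actual = row.get(field)
            if actual != expected_value:
                mismatches.append((row[key], field, actual, expected_value))
    return mismatches


if __name__ == "__main__":
    recorded = [{"id": 1, "age": 34}, {"id": 2, "age": 43}]  # 43: digits transposed
    reference = [{"id": 1, "age": 34}, {"id": 2, "age": 34}]
    print(find_inaccurate_values(recorded, reference))
```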

Data integrity is an ongoing process that requires a daily commitment to keeping your subjects' information safe.

Let's talk about how to make sure that your organized data is complete and accurate. One dimension to check is integrity, also known as uniqueness: the same record should not appear more than once.
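Completeness and uniqueness can both be checked record by record. In this sketch the required fields (`id`, `name`, `email`) are a hypothetical schema; a record counts as incomplete if any required field is missing or empty, and a key is flagged if it occurs more than once.

```python
from collections import Counter
from typing import Any, Iterable

# Hypothetical schema: adjust to the fields your records must carry.
REQUIRED_FIELDS = ("id", "name", "email")


def check_records(
    records: list[dict[str, Any]],
    required: Iterable[str] = REQUIRED_FIELDS,
    key: str = "id",
) -> dict[str, list]:
    required = tuple(required)
    # Completeness: positions of records with an absent or empty required field.
    incomplete = [
        i for i, rec in enumerate(records)
        if any(rec.get(field) in (None, "") for field in required)
    ]
    # Uniqueness: key values that occur more than once, in first-seen order.
    counts = Counter(rec.get(key) for rec in records if rec.get(key) is not None)
    duplicates = [k for k, n in counts.items() if n > 1]
    return {"incomplete_rows": incomplete, "duplicate_keys": duplicates}


if __name__ == "__main__":
    rows = [
        {"id": 1, "name": "Ada", "email": "ada@example.com"},
        {"id": 2, "name": "", "email": "grace@example.com"},
        {"id": 1, "name": "Ada", "email": "ada@example.com"},
    ]
    print(check_records(rows))
```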

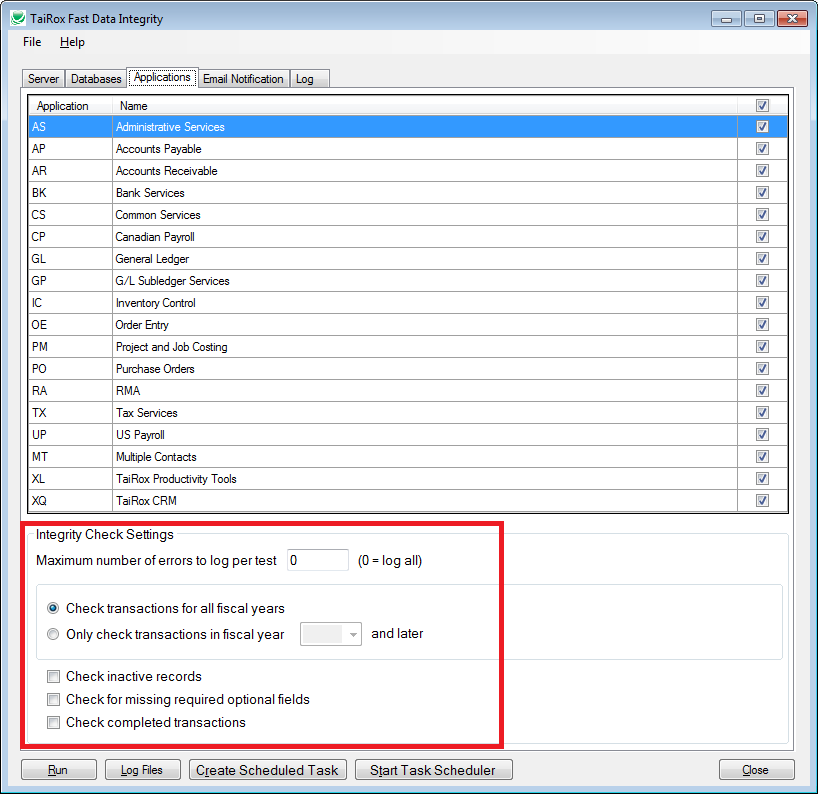

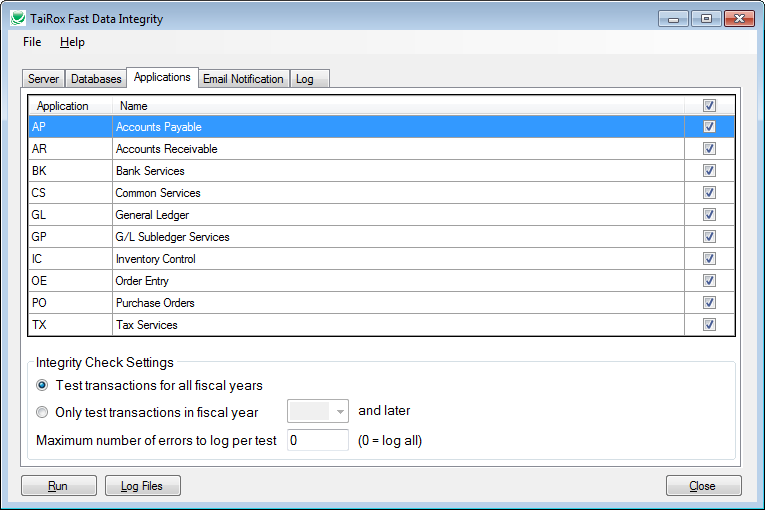

There are three goals of data integrity testing. Data integrity is the opposite of data corruption.

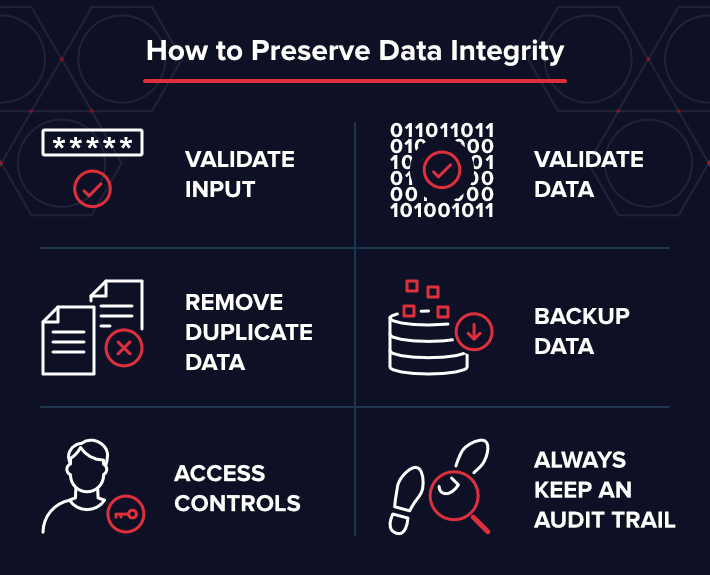

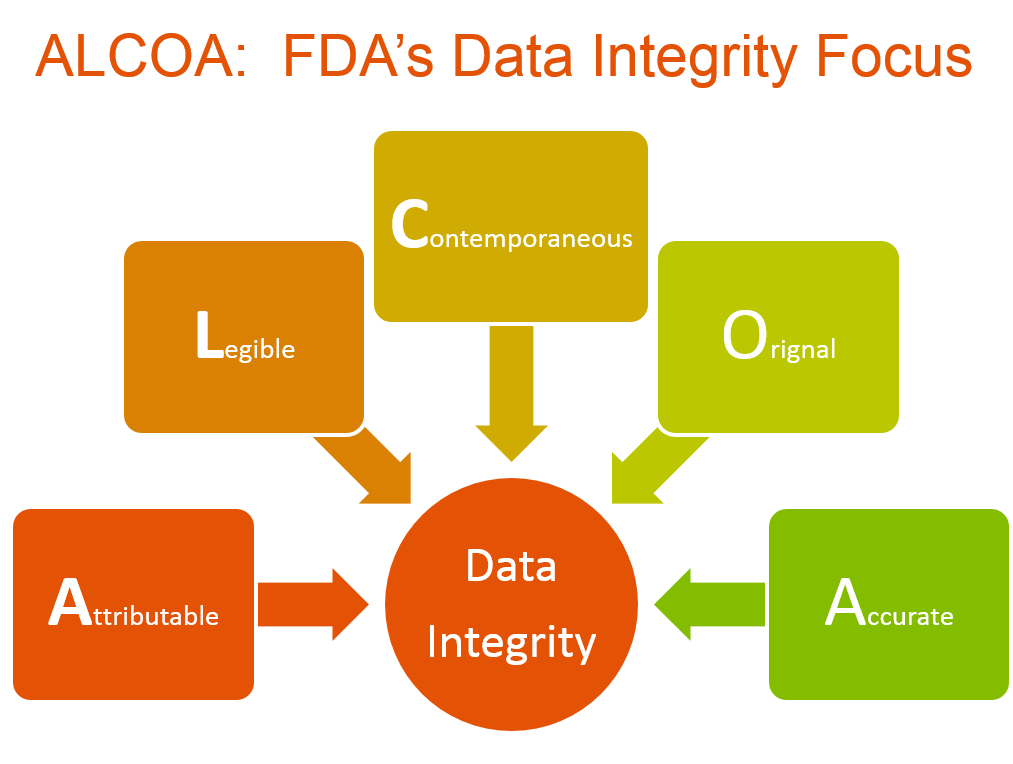

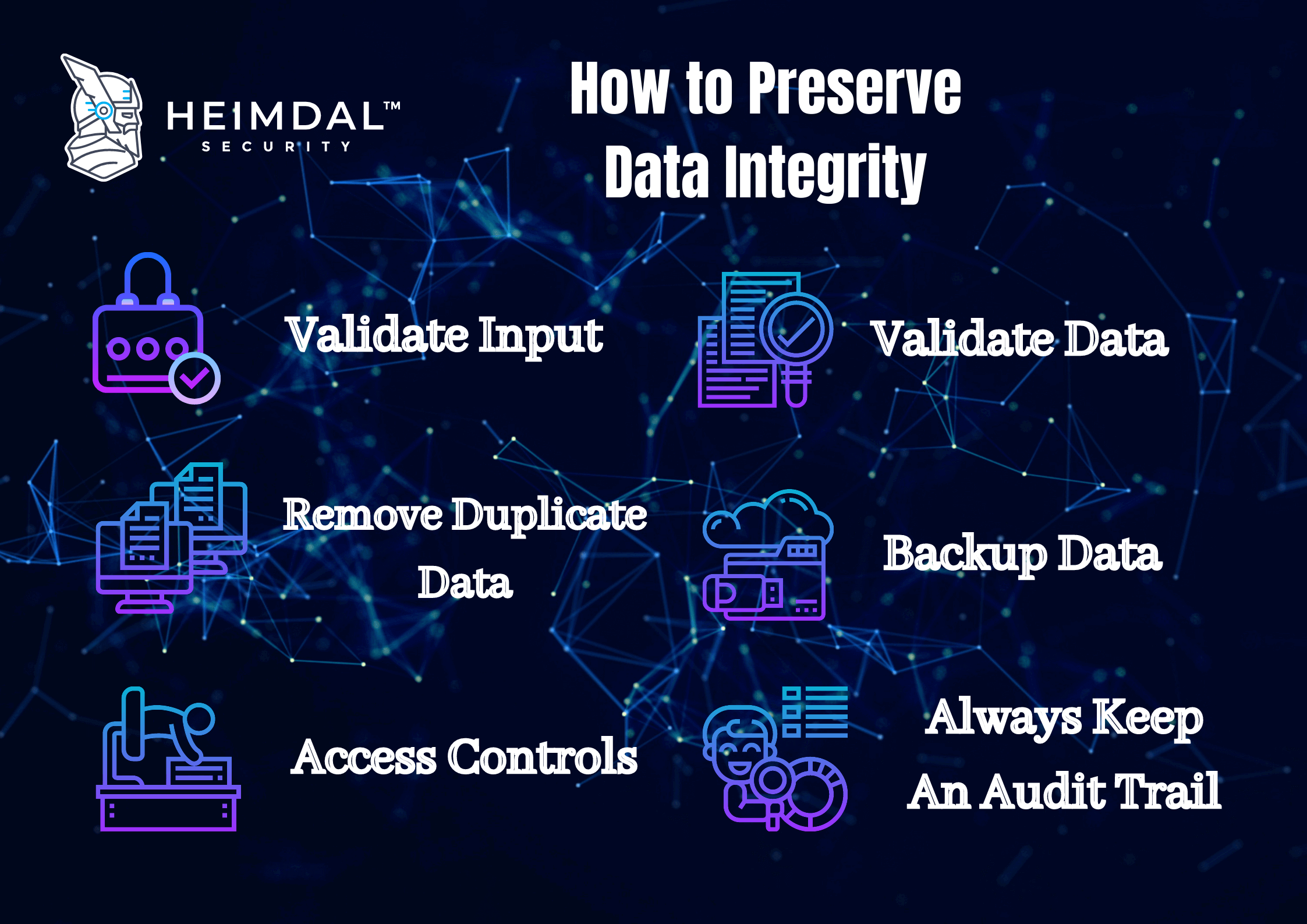

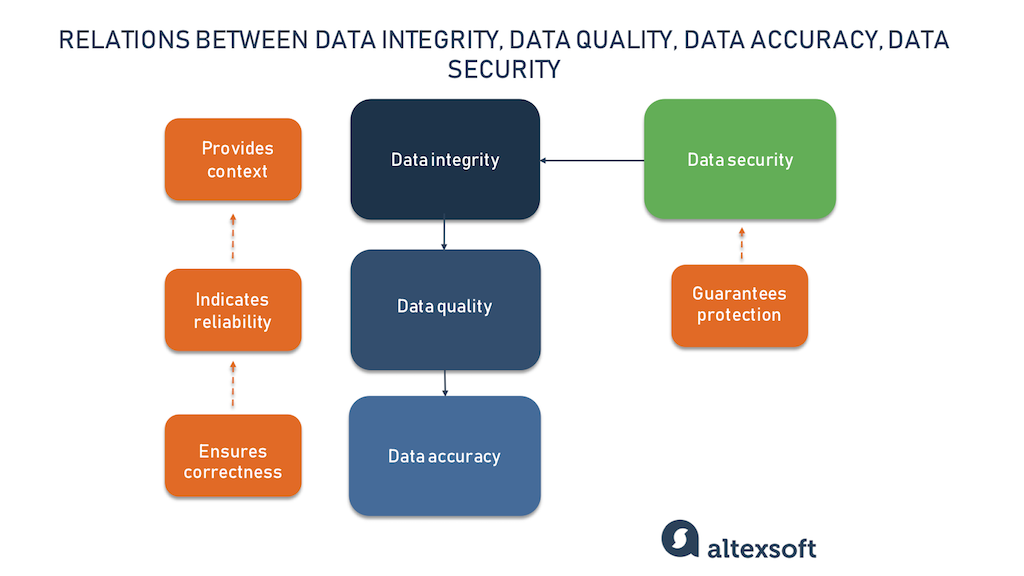

Data integrity encompasses the accuracy, reliability, and consistency of data over time. It is both a concept and a process that ensures the accuracy, completeness, consistency, and validity of an organization's data, and it can be examined using the following tests.

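Verification tests confirm that moved, copied, derived, and converted data is accurate and functions correctly within a single subsystem or application. Data type checks are a basic validation method for ensuring that individual characters are consistent with the expected characters as defined in data storage and retrieval mechanisms. Simple aggregate queries can also act as sanity checks: if an access log records each entry into a lab with a `direction` of 1 and each exit with -1, then `SELECT SUM(direction) FROM accesslog;` returns the total user count within the lab.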
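The `SUM(direction)` count is only trustworthy if the log itself is clean. This sketch makes the assumed layout explicit as a hypothetical SQLite table `accesslog(ts, user_id, direction)`, runs the same aggregate query, and adds two checks the sum silently depends on: every direction is 1 or -1, and the running head count never drops below zero when events are replayed in time order.

```python
import sqlite3

# Hypothetical schema: one row per badge event; direction is 1 (enter) or -1 (exit).
SCHEMA = "CREATE TABLE accesslog (ts INTEGER, user_id TEXT, direction INTEGER)"


def users_in_lab(conn: sqlite3.Connection) -> int:
    # COALESCE turns the NULL that SUM returns on an empty table into 0.
    (total,) = conn.execute("SELECT COALESCE(SUM(direction), 0) FROM accesslog").fetchone()
    return total


def integrity_problems(conn: sqlite3.Connection) -> list[str]:
    problems = []
    # NOT IN yields NULL for a NULL direction, so test IS NULL explicitly.
    (bad,) = conn.execute(
        "SELECT COUNT(*) FROM accesslog WHERE direction IS NULL OR direction NOT IN (1, -1)"
    ).fetchone()
    if bad:
        problems.append(f"{bad} row(s) with a direction other than 1 or -1")
    # Replaying events in time order, the head count must never go negative.
    running = 0
    for ts, direction in conn.execute("SELECT ts, direction FROM accesslog ORDER BY ts"):
        running += direction or 0
        if running < 0:
            problems.append(f"negative head count at ts={ts}")
            break
    return problems


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO accesslog VALUES (?, ?, ?)",
        [(1, "ana", 1), (2, "ben", 1), (3, "ana", -1)],
    )
    print(users_in_lab(conn), integrity_problems(conn))
```

A negative running total usually means a missing entry event or a clock problem, either of which would make the final sum misleading.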

Often, integrity issues arise when data is replicated or transferred. A data type check on a numeric data field, for example, can catch a value that picked up non-digit characters along the way.
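A data type check on a numeric field can be as small as a regular expression. This sketch assumes the field arrives as text (for example from a CSV export) and treats a value as numeric only if it is an optional sign, ASCII digits, and an optional decimal part; that rule is an illustrative choice, not a standard.

```python
import re

# Optional sign, ASCII digits, optional fractional part. Scientific notation,
# thousands separators, "nan" and "inf" are deliberately rejected.
NUMERIC = re.compile(r"[+-]?[0-9]+(?:\.[0-9]+)?")


def failed_numeric_check(values: list[str]) -> list[tuple[int, str]]:
    """Return (position, value) for every entry that is not a plain decimal number."""
    return [
        (i, v) for i, v in enumerate(values)
        if NUMERIC.fullmatch(v.strip()) is None
    ]


if __name__ == "__main__":
    # "4O2" contains a letter O where a zero was expected.
    print(failed_numeric_check(["42", " 3.14 ", "-7", "4O2", "", "1e3"]))
```

Python's built-in `float()` is more permissive (it accepts `"nan"`, `"inf"`, and underscores such as `"1_000"`), which is why the sketch uses an explicit pattern instead.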

The best data replication tools check for errors and validate the data to ensure it is intact and unaltered. It's fairly easy to tell whether a single record is complete, for example whether every required field has a value.

How to check and verify file integrity

Organizations planning content migrations should verify file integrity and make sure files weren't corrupted in the move. It is also worth asking whether different data sets can be joined correctly to reflect a larger picture. The overall intent of any data integrity technique is the same: to ensure the accuracy, completeness, consistency, and validity of data.
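Checksums are a common way to verify that files survived a migration: hash every file in the source and the target and compare. This sketch uses SHA-256 from Python's standard `hashlib`; the function names and the `missing`/`unexpected`/`corrupted` categories are illustrative, not taken from any particular migration tool.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_trees(source: Path, target: Path) -> dict[str, list[str]]:
    """Report files lost, added, or altered between source and target."""
    src, dst = manifest(source), manifest(target)
    return {
        "missing": sorted(src.keys() - dst.keys()),
        "unexpected": sorted(dst.keys() - src.keys()),
        "corrupted": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as a, tempfile.TemporaryDirectory() as b:
        source, target = Path(a), Path(b)
        (source / "report.txt").write_text("quarterly numbers")
        (target / "report.txt").write_text("quarterly numb3rs")  # simulated corruption
        print(compare_trees(source, target))
```

In a real migration the source manifest would be saved before the move, so the comparison still works after the source has been decommissioned.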

![Periodic Data Integrity Check (PDIC)](https://wiki.cloudbacko.com/lib/exe/fetch.php?media=public:features:periodic-data-integrity-check:cbk-pdic-05.png)